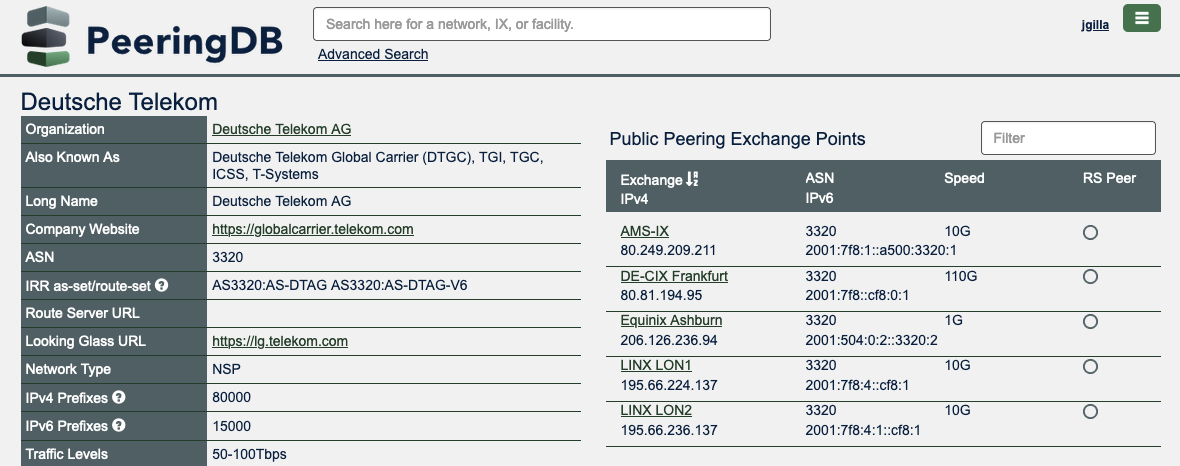

On the morning of 8 November 2022, multiple customers contacted me about connectivity issues to services pretty much all across the internet. Once I started digging through the customers' traceroutes and MTRs, I found that most of them showed excessive packet loss starting at a hop resolving to “dtag.equinix-fr5.nl-ix.net”. Until that point, I had not even been aware that Deutsche Telekom (AS3320) is connected to NL-ix, as they have neither listed their presence on PeeringDB…

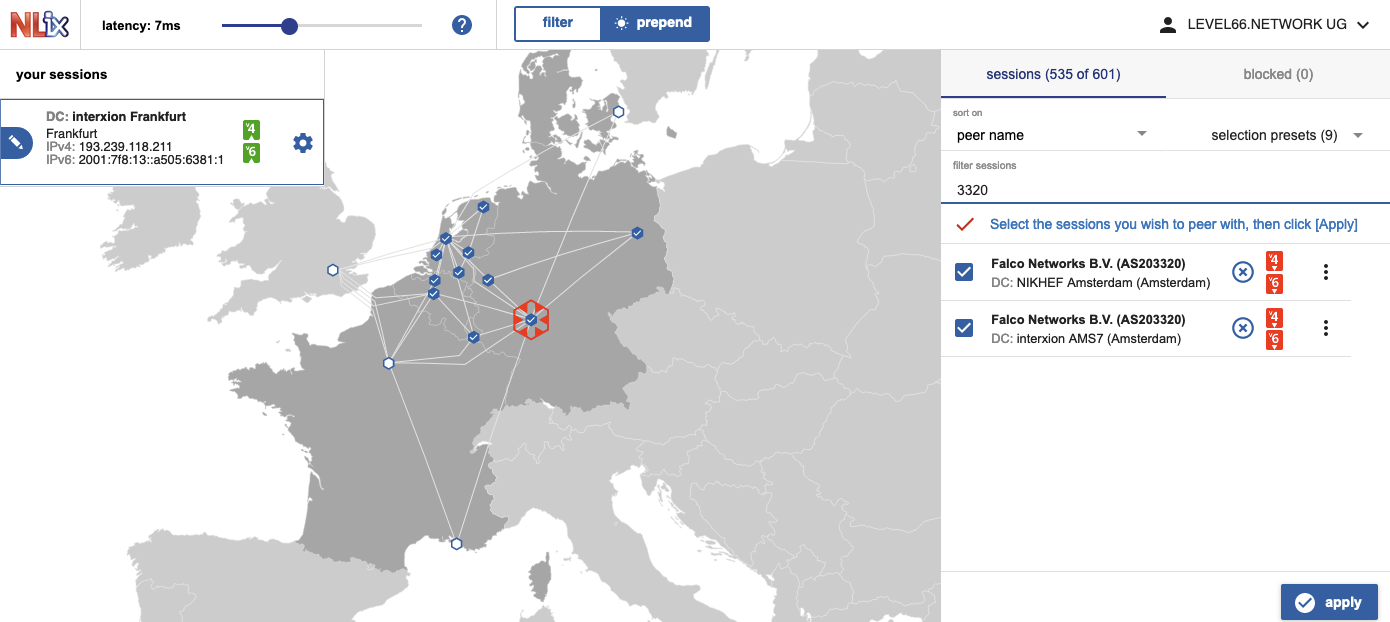

…nor is the peering listed in the session list of the NL-ix route server configurator.

To analyze the issue more deeply, I needed access to a network that is also connected to NL-ix. As it happens, I run the network of my company (AS56381), which is connected to NL-ix. It also uses Telekom as a transit provider (only AS3320 routes and those of their direct downstreams are imported), which makes it a perfect test bench.

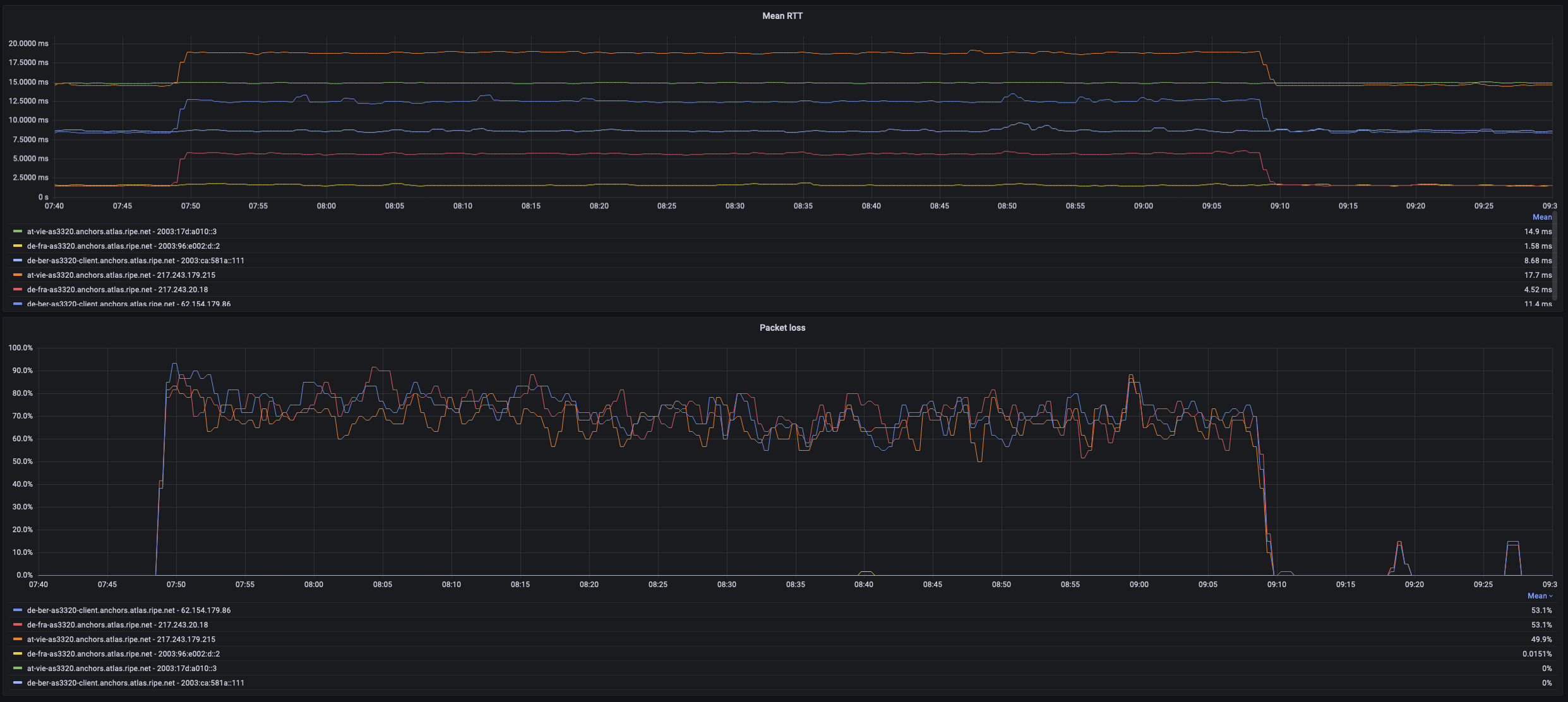

I quickly checked my existing latency dashboard in Grafana, which shows measurements created by ping_exporter against multiple destinations, including a number of NLNOG RING nodes as well as RIPE Atlas anchors. The dashboard for the datacenter directly connected to NL-ix showed increased latency as well as more than 60% packet loss to all IPv4 targets within AS3320. Interestingly, the same targets could be reached via IPv6 without any issue.

Because my company's setup is distributed across multiple datacenters, I am able to compare these values against measurements for traffic that reaches AS3320 via the direct BGP sessions. This shows that traffic handed over directly to AS3320 does not seem to be affected by the problem.

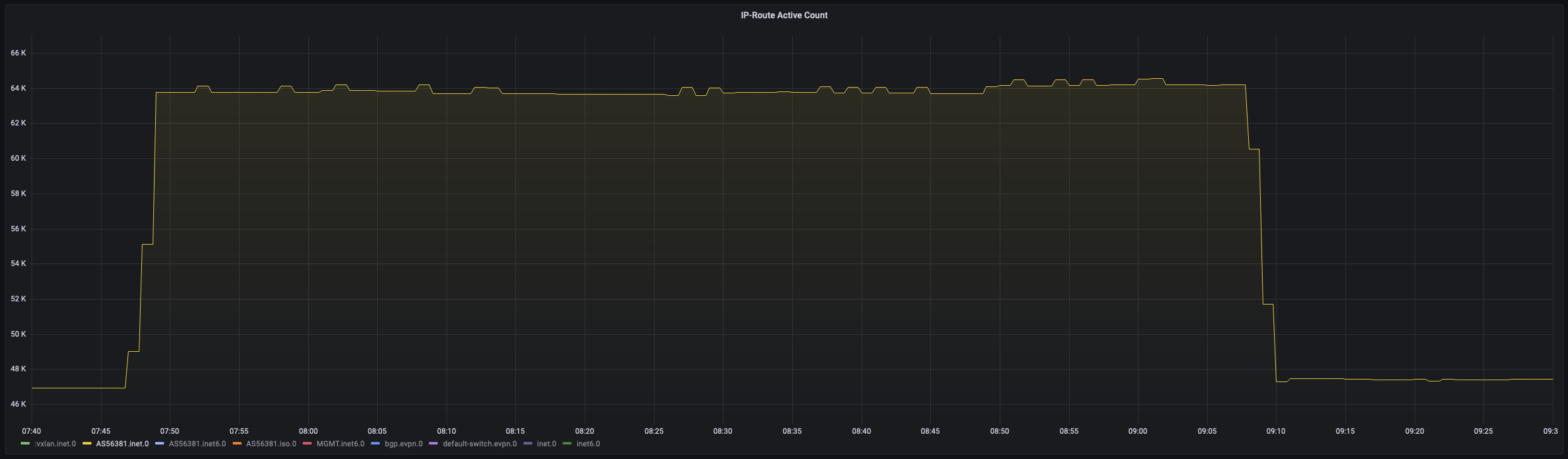

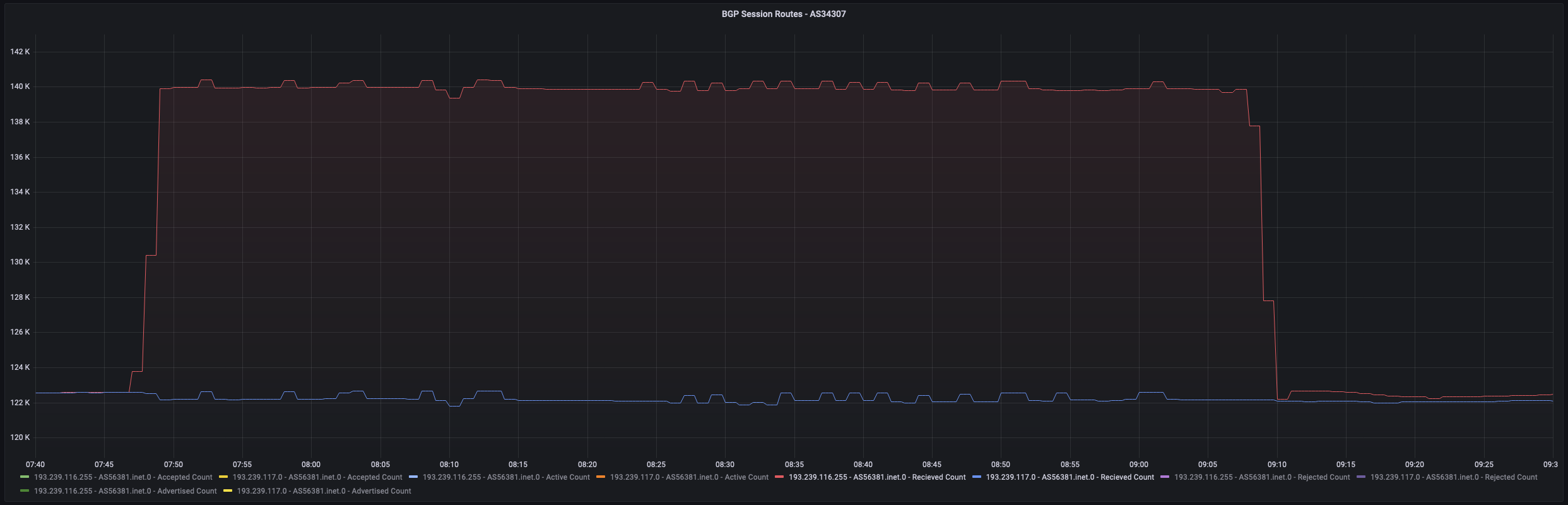

I continued to dig into my monitoring metrics and found an increased number of routes being installed on the router connected to NL-ix, starting at roughly 07:45 UTC. As the routers only carry a partial routing table (default routes from upstreams combined with more-specific routes received at IXPs), the change in the number of routes was quite significant. Before Telekom's change, around 47,000 routes were installed. That number quickly increased to around 64,000 routes during the incident.
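
To put the jump into numbers, here is a minimal sketch of the arithmetic. The two route counts are the approximate values from above; the 20% alert threshold and the function name are purely hypothetical and not part of my actual monitoring setup.

```python
def relative_increase(before: int, after: int) -> float:
    """Return the growth of a counter as a fraction of its baseline."""
    return (after - before) / before


# Approximate values observed on the router connected to NL-ix.
BASELINE_ROUTES = 47_000
INCIDENT_ROUTES = 64_000

# Hypothetical threshold: flag anything that grows by more than 20%.
ALERT_THRESHOLD = 0.20

growth = relative_increase(BASELINE_ROUTES, INCIDENT_ROUTES)
should_alert = growth > ALERT_THRESHOLD

print(f"{INCIDENT_ROUTES - BASELINE_ROUTES} additional routes (+{growth:.0%})")
# prints "17000 additional routes (+36%)"
```

On a router with a partial table, a jump of this size stands out clearly, which is what made the metric useful here.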

Digging even deeper into the metrics, I found that the routes were only being received from the first of the two route servers at NL-ix. The second, redundant route server did not seem to receive those routes, or at least did not export them.

I checked the routing table on the router and could quickly find the newly announced prefixes by filtering on the AS path and looking for the IP address of the NL-ix route server. You can see the difference in the next hop between routes that were imported only via NL-ix (xe-0/0/5.2202) and routes that were imported via both NL-ix and the transit session (xe-0/0/0.618).

    jgilla@de-fra4-sw2> show route aspath-regex "3320 .*"

    AS56381.inet.0: 898292 destinations, 1259794 routes (57317 active, 0 holddown, 1154580 hidden)
    + = Active Route, - = Last Active, * = Both

    2.17.224.0/22 *[BGP/170] 01:19:58, localpref 100, from 193.239.116.255
        AS path: 3320 9121 ?, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.17.228.0/22 *[BGP/170] 01:20:28, localpref 100, from 193.239.116.255
        AS path: 3320 9121 ?, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.17.232.0/22 *[BGP/170] 01:19:42, localpref 100, from 193.239.116.255
        AS path: 3320 9121 ?, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.17.236.0/22 *[BGP/170] 01:19:58, localpref 100, from 193.239.116.255
        AS path: 3320 9121 ?, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.19.179.0/24 *[BGP/170] 01:21:17, localpref 100, from 193.239.116.255
        AS path: 3320 3320 3303 12874 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.56.11.0/24 *[BGP/170] 01:21:24, localpref 100, from 193.239.116.255
        AS path: 3320 34854 213034 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.56.61.0/24 *[BGP/170] 01:20:18, localpref 100, from 193.239.116.255
        AS path: 3320 9121 43260 199366 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.56.62.0/24 *[BGP/170] 01:20:18, localpref 100, from 193.239.116.255
        AS path: 3320 9121 43260 199366 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.56.63.0/24 *[BGP/170] 01:20:18, localpref 100, from 193.239.116.255
        AS path: 3320 9121 43260 199366 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.56.76.0/22 [BGP/170] 01:20:18, localpref 100, from 193.239.116.255
        AS path: 3320 209216 209216 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    2.56.96.0/22 *[BGP/170] 01:20:55, localpref 100, from 193.239.116.255
        AS path: 3320 47147 197540 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
    ...
    5.10.220.0/24 *[BGP/170] 01:20:56, localpref 100, from 193.239.116.255
        AS path: 3320 I, validation-state: unverified
        > to 193.239.116.83 via xe-0/0/5.2202
        [BGP/170] 05:15:17, localpref 100, from 141.98.136.1
        AS path: 3320 I, validation-state: unverified
        > to 141.98.136.41 via xe-0/0/0.618
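
With thousands of routes, separating the two groups by eye gets tedious. The following is a rough sketch of how output like the above could be grouped by outgoing interface. It is not what I ran during the incident; the function names are hypothetical, and the regular expressions only assume the IPv4 line layout visible in the excerpt (a prefix at the start of a route entry, `via <interface>` at the end of a next-hop line).

```python
import re

# A route entry starts with an IPv4 prefix such as "2.56.11.0/24".
PREFIX_RE = re.compile(r"^(\d{1,3}(?:\.\d{1,3}){3}/\d{1,2})\s")
# A next-hop line ends with "via <interface>".
VIA_RE = re.compile(r"via (\S+)$")

# Two entries taken from the output above.
SAMPLE = """\
2.56.11.0/24 *[BGP/170] 01:21:24, localpref 100, from 193.239.116.255
AS path: 3320 34854 213034 I, validation-state: unverified
> to 193.239.116.83 via xe-0/0/5.2202
5.10.220.0/24 *[BGP/170] 01:20:56, localpref 100, from 193.239.116.255
AS path: 3320 I, validation-state: unverified
> to 193.239.116.83 via xe-0/0/5.2202
[BGP/170] 05:15:17, localpref 100, from 141.98.136.1
AS path: 3320 I, validation-state: unverified
> to 141.98.136.41 via xe-0/0/0.618
"""


def interfaces_by_prefix(text: str) -> dict[str, set[str]]:
    """Map each prefix to the set of interfaces its paths point to."""
    result: dict[str, set[str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()  # tolerate indentation and blank lines
        prefix_match = PREFIX_RE.match(line)
        if prefix_match:
            current = prefix_match.group(1)
            result.setdefault(current, set())
        via_match = VIA_RE.search(line)
        if via_match and current is not None:
            result[current].add(via_match.group(1))
    return result


def only_via(text: str, interface: str) -> list[str]:
    """Return prefixes whose paths all leave through the given interface."""
    table = interfaces_by_prefix(text)
    return sorted(p for p, ifaces in table.items() if ifaces == {interface})


print(only_via(SAMPLE, "xe-0/0/5.2202"))
# prints ['2.56.11.0/24']
```

Prefixes returned by `only_via` for the NL-ix interface are the ones that had no alternative path via the transit session on this router.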

As the AS path via NL-ix is very short (it only includes the ASN of DTAG and no other upstreams), many networks selected the path via NL-ix as their best path to Telekom's network. This shifted more and more traffic from Telekom's other connectivity over to NL-ix, which in the end probably filled Telekom's port at NL-ix. That congestion in turn caused the packet loss and the increase in latency, primarily on the path to the network of Deutsche Telekom.

Once Telekom became aware of the issue, they took measures to withdraw the prefix announcements from the NL-ix route server quickly. This change became effective at around 09:10 UTC, bringing packet loss and latency back to normal values.
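
The effect of the short AS path can be illustrated with a heavily simplified model of BGP best-path selection. This sketch only compares local preference and AS-path length and ignores all later tie-breakers (origin, MED, and so on). The transit ASN 64500 is a placeholder from the documentation range, and the class and function names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    learned_from: str
    local_pref: int
    as_path: tuple[int, ...]


def best_path(routes: list[Route]) -> Route:
    """Simplified selection: highest local-pref first, then shortest AS path."""
    return min(routes, key=lambda r: (-r.local_pref, len(r.as_path)))


# Path received from the NL-ix route server: only Telekom's ASN.
via_ixp = Route("NL-ix route server", 100, (3320,))
# Hypothetical path via a transit provider (AS64500) that reaches AS3320.
via_transit = Route("transit provider", 100, (64500, 3320))

best = best_path([via_transit, via_ixp])
print(best.learned_from)
# prints "NL-ix route server"
```

With equal local preference, the one-hop path via NL-ix wins. A network that explicitly prefers its transit with a higher local preference would not have moved its traffic, which is consistent with only part of the internet being affected.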